Political Economy of Reinforcement Learning (PERLS) Group

You are viewing the archive of the 2021 PERLS workshop, which was held on Tuesday, December 14th, 2021, as part of the NeurIPS conference.

Overview

Sponsored by the Center for Human-Compatible AI at UC Berkeley, and with support from the Simons Institute and the Center for Long-Term Cybersecurity, we are convening a cross-disciplinary group of researchers to examine the near-term policy concerns of Reinforcement Learning (RL). RL is a rapidly growing branch of AI research, with the capacity to learn to exploit our dynamic behavior in real time. From YouTube’s recommendation algorithm to post-surgery opioid prescriptions, RL algorithms are poised to permeate our daily lives. The ability of the RL system to tease out behavioral responses, and the human experimentation inherent to its learning, motivate a range of crucial policy questions about RL’s societal implications that are distinct from those addressed in the literature on other branches of Machine Learning (ML).

We began addressing these issues as part of the 2020 Simons Institute program on the Theory of Reinforcement Learning, and throughout 2020/21 we have been broadening the discussion through an ongoing reading group, including perspectives from Law and Policy. The aim of this workshop will be to establish a common language around the state of the art of RL across key societal domains. From this examination, we hope to identify specific interpretive gaps that can be elaborated or filled by members of our community. Our ultimate goal will be to map near-term societal concerns and indicate possible cross-disciplinary avenues towards addressing them.

Call for Papers

We are inviting papers from the fields of computer science, governance, law, economics, and game theory within the following research tracks:

- Vagueness and specification. RL methods require a specification of actions, observations, and rewards which condense vague real-world systems into a form suitable for our algorithms. However, this specification makes impactful choices which give an underlying sense of normative indeterminacy -- the lack of prior standards or guidelines for how a given system ought to perform in simulation or post-deployment. Technical and policy discussions related to optimization and control often fail to address this more basic, irreducibly sociotechnical problem. The saliency of vagueness is likely to increase as RL capabilities expand, allowing designers to intervene at scales that were previously inaccessible even to governments and corporations. The following questions seem in scope: How might designers make sense of emergent system behaviors that are difficult to evaluate? Whose expertise or judgment is needed to better evaluate such behaviors either within or across domains? In what ways and contexts can the task of RL specification actually help refine the understanding of a given problem domain?

- Legitimacy, Accountability, and Feedback. The maturation of RL naturally raises questions about who should have the ability to oversee these systems and evaluate performance over time. In terms of how an agent learns to navigate a given environment proficiently, RL may in fact automate many feedback mechanisms for oversight and control that governmental institutions presently enforce or take for granted. Such determinations lie at the heart of modern conceptions of political sovereignty. We ask: How might preferred optimization techniques bear on existing normative concerns (e.g. data privacy)? To what extent do stakeholders have a voice in system evaluation? How can choices about how to structure computation be brought into alignment with legal conceptions of rights and duties?

- Tools for democratization. There is growing concern that the successful and safe integration of advanced AI systems into human societies will require democratic control and oversight of those systems themselves. At present there are many proposed standards, guidelines, laws, governance mechanisms, and regulations that could be leveraged to enact such democratization, as well as organizational theories (e.g. distributed expertise) that suggest how it should proceed. But it remains unclear what forms of inquiry, evaluation, and control are warranted or ought to be prioritized to deal with the challenges of RL in particular domains. We invite papers that propose new policy tools (technical or otherwise) in the context of RL, as well as explicitly normative contributions to the ongoing debate about what is at stake in democratization.

We encourage a broad interpretation of the problem spaces outlined in this call, and would urge researchers broadly interested in the topic of RL and society to consider submitting a contribution, even if it does not explicitly fall within the topics proposed above.

Accepted Papers

This year we had six paper submissions, of which five were accepted for poster presentations during the workshop. You can download a BibTeX file for all accepted papers here.

The accepted papers are listed below in alphabetical order of the first author’s surname.

- Chapman, M., Scoville, C., & Boettiger, C. (2021). Power and Accountability in RL-driven Environmental Policy. Workshop on the Political Economy of Reinforcement Learning Systems. Neural Information Processing Systems.
  https://openreview.net/forum?id=6OnoKEFVD_G

- Chong, A. (2021). Deciding What’s Fair: Challenges of Applying Reinforcement Learning in Online Marketplaces. Workshop on the Political Economy of Reinforcement Learning Systems. Neural Information Processing Systems.
  https://openreview.net/forum?id=KB5Kv85Mzg1

- Eschenbaum, N., & Zahn, P. (2021). Robust Algorithmic Collusion. Workshop on the Political Economy of Reinforcement Learning Systems. Neural Information Processing Systems.
  https://openreview.net/forum?id=BztUFukcBry

- Holtman, K. (2021). Demanding and Designing Aligned Cognitive Architectures. Workshop on the Political Economy of Reinforcement Learning Systems. Neural Information Processing Systems.
  https://openreview.net/forum?id=bFuvcB4IeQO

- Hu, Y., Zhu, Z., Song, S., Liu, X., & Yu, Y. (2021). Calculus of Consent via MARL: Legitimating the Collaborative Governance Supplying Public Goods. Workshop on the Political Economy of Reinforcement Learning Systems. Neural Information Processing Systems.
  https://openreview.net/forum?id=qNAeKJKftJy

Confirmed speakers and panelists

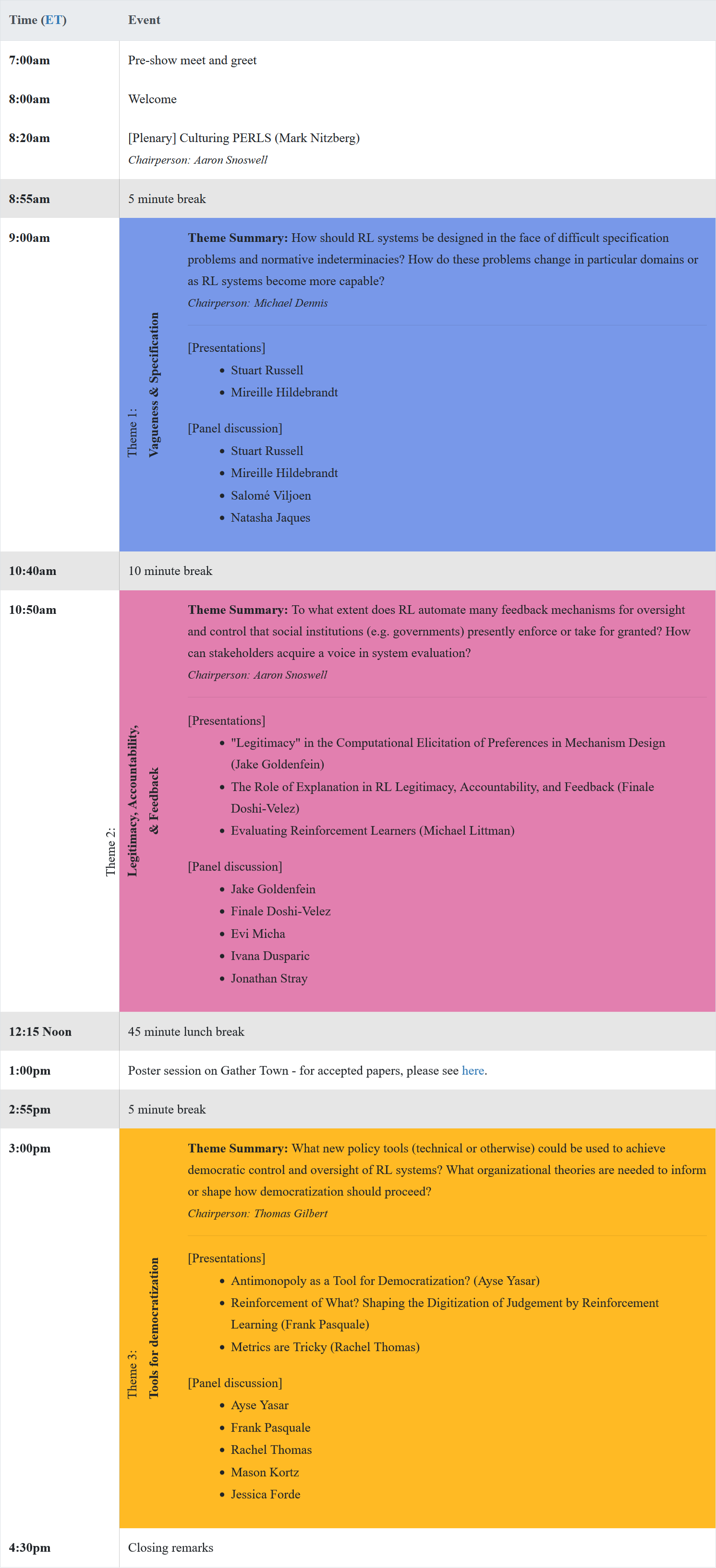

Schedule